Parallelism in Dinamica EGO

What is Parallelism?

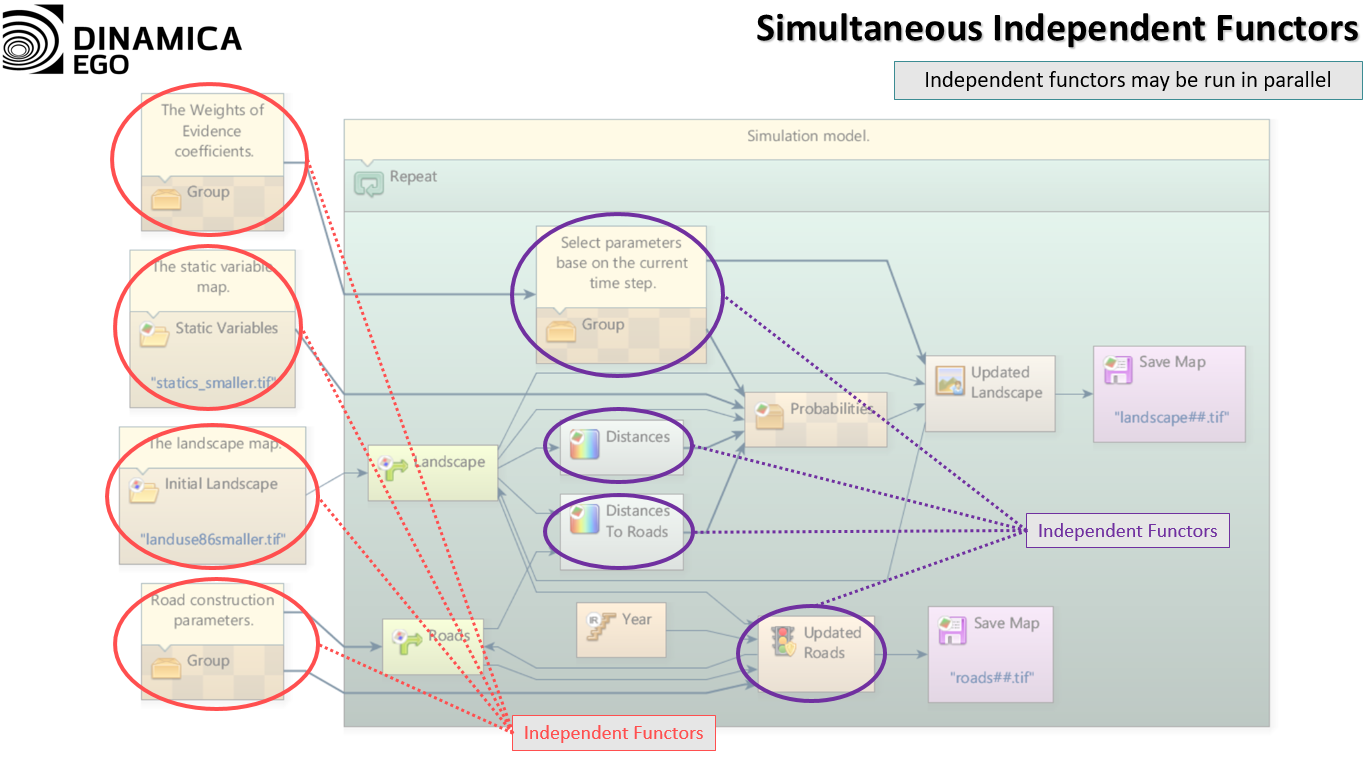

The term parallelism (in computer science) refers to techniques that allow two or more computing tasks to execute at the same time. A key challenge in parallelism is reducing data dependencies, so that computations can be performed on independent computing units with minimal communication between them.

On modern systems, parallelism can be achieved in two different ways:

• By interleaving tasks on the same processing unit, giving each one a short time slice and pausing the inactive tasks so that they appear to run at the same time;

• By having multiple processing units that physically execute tasks simultaneously.

Since modern processor architectures are composed of many cores, the latter method is by far the faster of the two. On such systems, programmers must adapt their software to take advantage of parallelism, taking concurrent access to data into account. To do that, data is usually broken into equal, independent slices that are processed on different computing units. A fundamental problem arises, however, when the data is not easily separable or depends on many computing iterations. To deal with this situation, software developers and architects have devised many algorithms and techniques that minimize data dependencies and communication between computing units. To this end, it can even be an advantage to do the same computation twice on different units to avoid synchronization.
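The slicing strategy described above, including the trick of computing some values twice, can be sketched in Python. This is a hypothetical illustration, not Dinamica EGO code: a one-dimensional list of numbers stands in for a raster row, a 3-cell moving average repeated `iterations` times stands in for a model step, and all function names are made up for the example. Each slice is padded with a halo (ghost cells) of neighbouring cells wide enough that it can run every iteration on its own, without exchanging borders with its siblings. The halo cells are computed by both adjacent slices, and that duplicated work is what replaces synchronization.

```python
from concurrent.futures import ThreadPoolExecutor


def smooth_once(values):
    """One 3-cell moving-average pass; edge cells average only the neighbours they have."""
    n = len(values)
    out = []
    for i in range(n):
        window = values[max(0, i - 1):min(n, i + 2)]
        out.append(sum(window) / len(window))
    return out


def smooth_serial(values, iterations):
    """Reference version: the whole row is processed by a single unit."""
    for _ in range(iterations):
        values = smooth_once(values)
    return values


def smooth_chunk(values, start, end, iterations):
    """Compute cells [start, end) without talking to any other chunk.

    The chunk is padded with a halo of `iterations` cells on each side. Every pass
    corrupts one more cell next to an artificial (non-global) edge, so after
    `iterations` passes only the halo is wrong and cells [start, end) are exact.
    The halo cells are also computed by the neighbouring chunk: redundant work
    instead of a border exchange after every pass.
    """
    n = len(values)
    lo, hi = max(0, start - iterations), min(n, end + iterations)
    padded = values[lo:hi]
    for _ in range(iterations):
        padded = smooth_once(padded)
    return padded[start - lo:end - lo]


def smooth_parallel(values, iterations, n_chunks=4):
    """Split the row into (nearly) equal slices and smooth each slice in its own task."""
    n = len(values)
    bounds = [(i * n // n_chunks, (i + 1) * n // n_chunks) for i in range(n_chunks)]
    with ThreadPoolExecutor(max_workers=n_chunks) as pool:
        parts = list(pool.map(lambda b: smooth_chunk(values, b[0], b[1], iterations), bounds))
    result = []
    for part in parts:
        result.extend(part)
    return result


row = [float(x % 7) for x in range(40)]
print(smooth_parallel(row, iterations=3) == smooth_serial(row, iterations=3))  # True
```

In standard CPython the global interpreter lock makes these threads take turns on one core for pure-Python work (the first method in the list above); a `ProcessPoolExecutor` mapping the top-level `smooth_chunk` function instead of the lambda would run the slices on separate cores. The wider the halo, the more work is duplicated, so the halo width trades redundant computation against how often slices would otherwise have to synchronize.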

Why is Parallelism important?

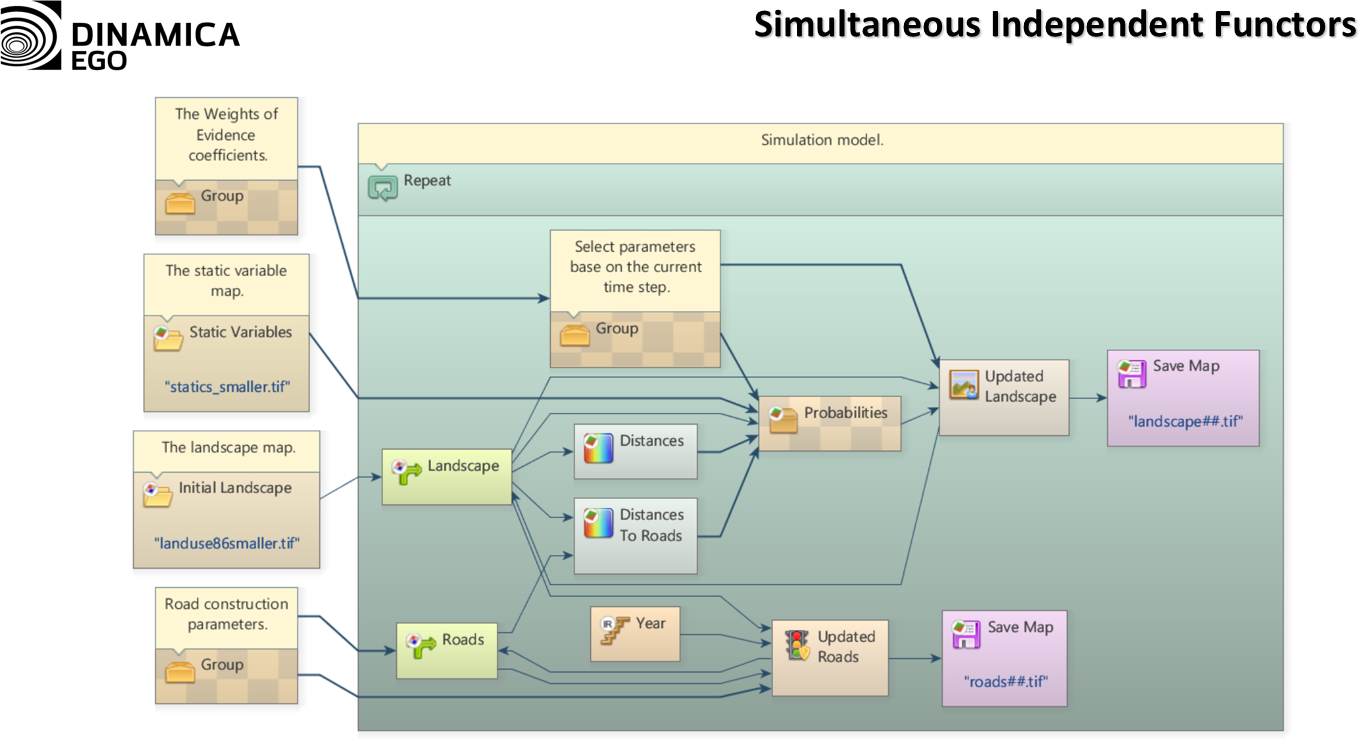

Efficiently using these parallel capabilities is necessary to meet user demands for modelling complex dynamics with large datasets. However, users may be reluctant to develop new models, or adapt their current ones, to take advantage of these parallel environments, because such development is often complicated, time-consuming, and error-prone.

Graphics computations on a GPU are parallel, too!

Besides general-purpose processors, other classes of devices, such as GPUs, also offer parallelism. These devices can perform far more concurrent operations than traditional CPUs because they contain many more processing cores. Since GPU cores are dedicated to certain types of tasks, these devices also require their own programming model and data-access rules.

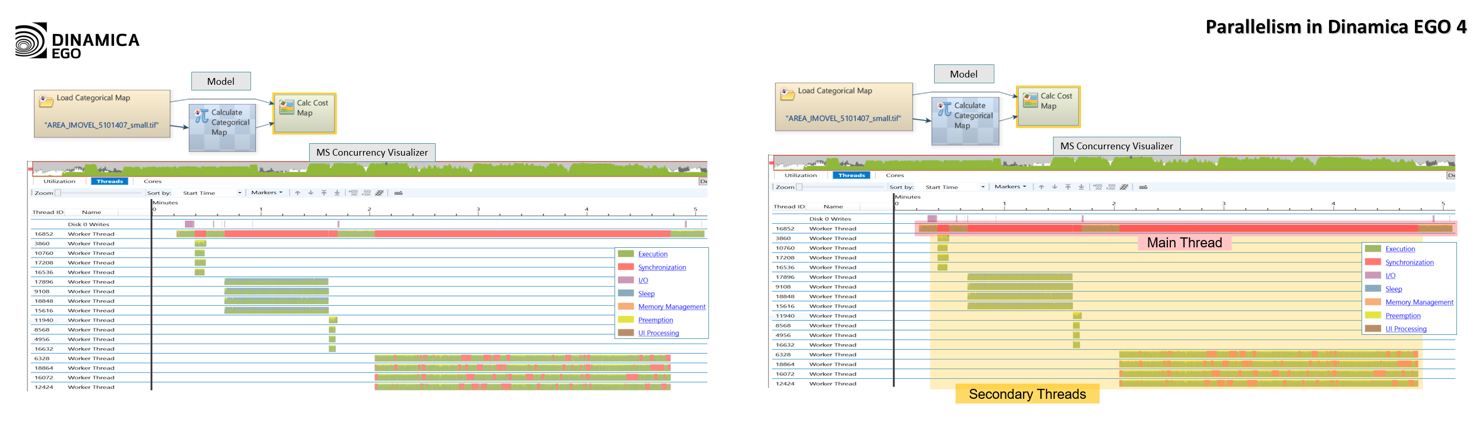

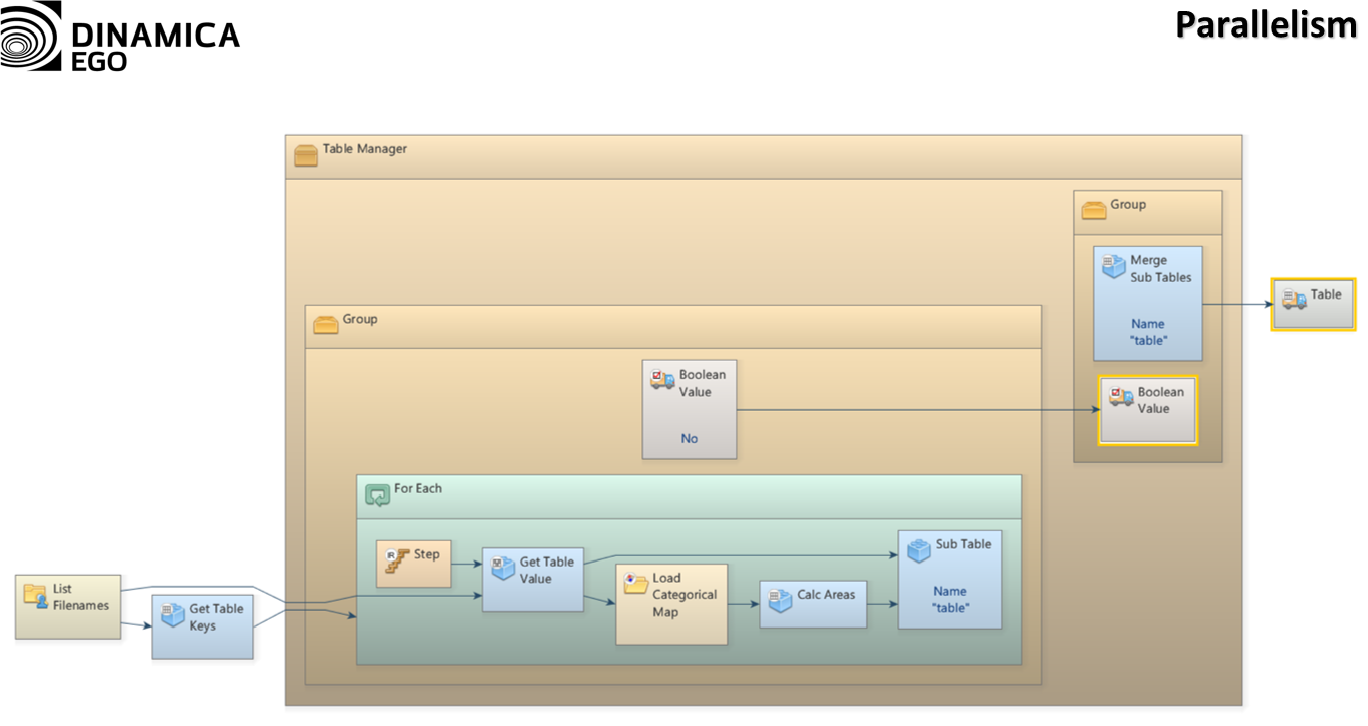

How did we change the Dinamica EGO infrastructure to make everything as parallel as possible?

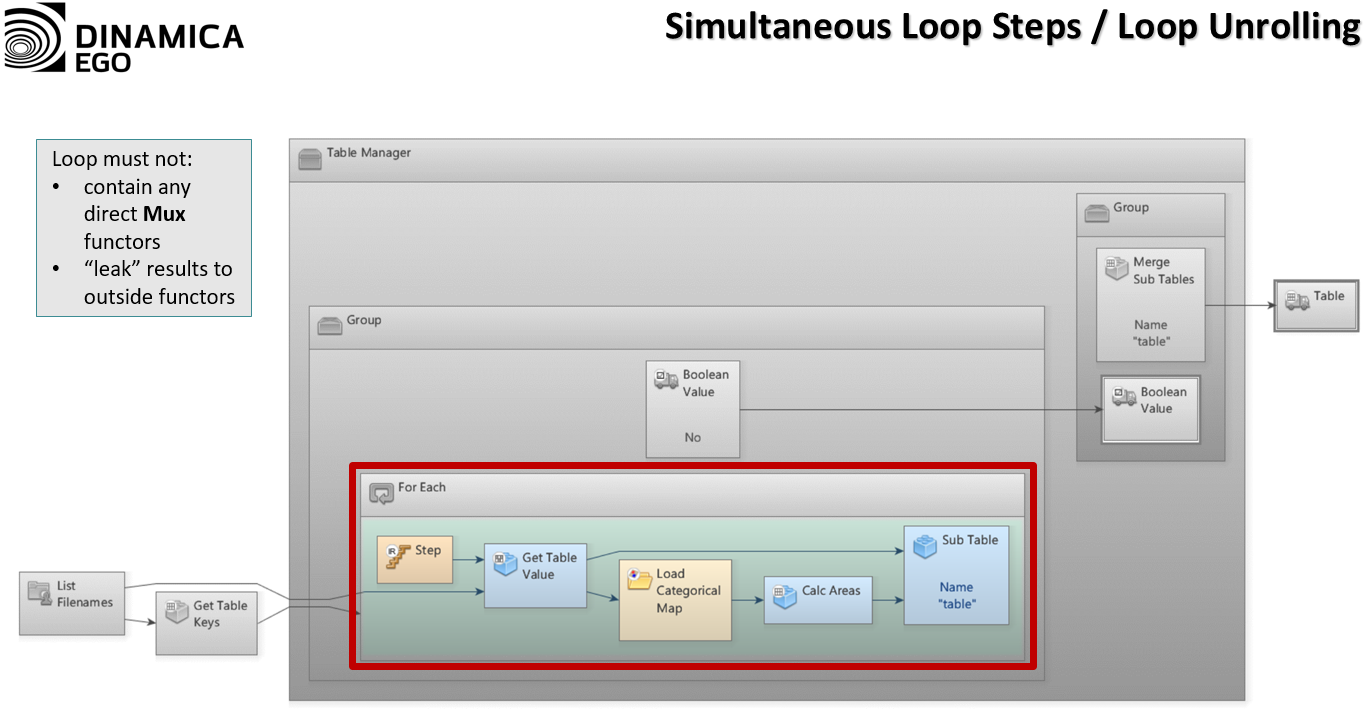

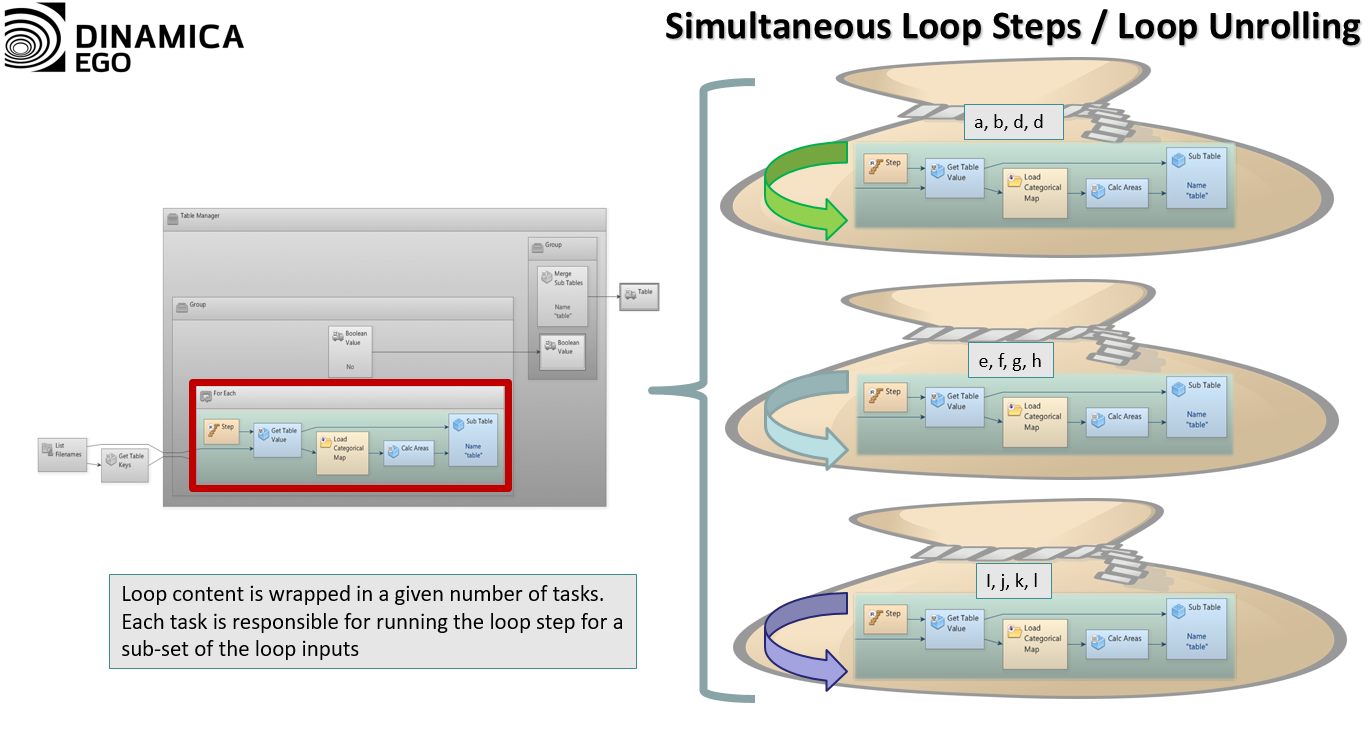

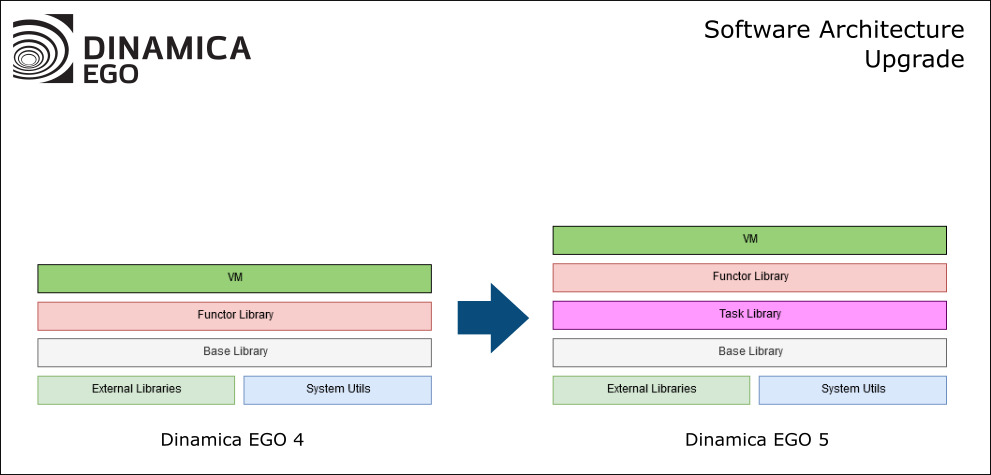

Before version 5, parallelism in Dinamica EGO was handled directly by the Virtual Machine (VM) and the Functor Library (FL), without global coordination. This caused an uneven distribution of work among computing systems and suboptimal use of machine resources. The Task Library was introduced in Dinamica EGO 5 to address these problems by creating a global system for handling and processing work. We restructured the VM and FL subsystems so that models are now broken into tasks according to the computer's capabilities and the model semantics (i.e., the rules the computer must follow as prescribed by the model). These tasks are distributed to the underlying computing systems, such as processor cores and graphics processing units (GPUs), and the workload is balanced using work stealing, in which a computing unit that runs out of work takes pending tasks from another unit's queue. In version 5, every computing unit is able to manipulate and transform data in parallel (e.g., parts of an image can be read and written simultaneously) and also to communicate, synchronize, and exchange data with its siblings.
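To make the work-stealing idea concrete, here is a minimal Python sketch of a generic work-stealing scheduler. It illustrates the technique only and is not the Task Library's actual design or API; the class `WorkStealingPool`, its methods, and the recursive sum task are hypothetical names chosen for this example. Each worker keeps its own deque of tasks: it pushes and pops at one end, while an idle worker steals from the other end of a randomly chosen victim. Tasks may spawn subtasks, loosely mirroring how a model is broken into smaller tasks, and all work starts on a single worker so the others must steal to stay busy.

```python
import random
import threading
import time
from collections import deque


class WorkStealingPool:
    """Hypothetical work-stealing scheduler; not Dinamica EGO's Task Library."""

    def __init__(self, n_workers):
        self.deques = [deque() for _ in range(n_workers)]
        self.locks = [threading.Lock() for _ in range(n_workers)]
        self.pending = 0                      # tasks spawned but not yet finished
        self.pending_lock = threading.Lock()
        self.executed_by = [0] * n_workers    # how many tasks each worker ran

    def spawn(self, worker_id, task):
        """Push `task`, a callable task(pool, worker_id), onto the caller's own deque."""
        with self.pending_lock:
            self.pending += 1
        with self.locks[worker_id]:
            self.deques[worker_id].append(task)

    def _next_task(self, worker_id):
        with self.locks[worker_id]:
            if self.deques[worker_id]:
                return self.deques[worker_id].pop()        # own work: newest first (LIFO)
        victims = [v for v in range(len(self.deques)) if v != worker_id]
        random.shuffle(victims)
        for v in victims:
            with self.locks[v]:
                if self.deques[v]:
                    return self.deques[v].popleft()        # stolen work: oldest first (FIFO)
        return None

    def _worker(self, worker_id):
        while True:
            task = self._next_task(worker_id)
            if task is None:
                with self.pending_lock:
                    if self.pending == 0:                  # nothing queued or running anywhere
                        return
                time.sleep(0.0001)                         # others are still busy; retry soon
                continue
            task(self, worker_id)                          # may spawn subtasks before finishing
            self.executed_by[worker_id] += 1
            with self.pending_lock:
                self.pending -= 1

    def run(self, root_task):
        self.spawn(0, root_task)                           # all work starts on worker 0
        threads = [threading.Thread(target=self._worker, args=(i,))
                   for i in range(len(self.deques))]
        for t in threads:
            t.start()
        for t in threads:
            t.join()


def make_sum_task(data, lo, hi, total, total_lock, grain=1000):
    """Task that halves data[lo:hi] until it is small, then adds it to total[0]."""
    def task(pool, worker_id):
        if hi - lo <= grain:
            partial = sum(data[lo:hi])
            with total_lock:
                total[0] += partial
            return
        mid = (lo + hi) // 2
        pool.spawn(worker_id, make_sum_task(data, lo, mid, total, total_lock, grain))
        pool.spawn(worker_id, make_sum_task(data, mid, hi, total, total_lock, grain))
    return task


data = list(range(100_000))
total, total_lock = [0], threading.Lock()
pool = WorkStealingPool(n_workers=4)
pool.run(make_sum_task(data, 0, len(data), total, total_lock))
print(total[0], pool.executed_by)   # total is 4999950000; the per-worker split varies
```

Popping the newest task locally keeps a worker on data it has just touched, while stealing the oldest task tends to hand the thief a large share of the remaining work, so steals stay relatively rare. Python threads and plain locks only keep the sketch short; production schedulers commonly rely on native threads and lock-free deques such as the Chase-Lev deque.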

Tasks + Work Stealing in Dinamica EGO 5